When Google's Bard AI is asked any question about Israel and Palestine, the chatbot basically shuts down. The questions can be anything from the seemingly innocent ("Where is Israel?") to those centered around the ongoing Israel-Hamas war. Bard responds to each of these inquiries with a variation of the message: "I'm a text-based AI and can't assist with that."

Google's chatbot appears to be censoring any answer related to the current crisis. This applies to a variety of questions we and many users have asked using keywords including Israel, Gaza, Palestine, and the IDF (the abbreviation for the Israel Defense Forces). Bard's responses to us included: "I'm a language model and don't have the capacity to help with that."

Credit: Screenshot / Google

Users on X first pointed out this issue, also showing that the chatbot would easily answer questions about other countries, regions, and locations. Google Bard also responds to questions about other ongoing global conflicts, such as the war in Ukraine. When asked to explain this conflict, Bard provided this information: "In February 2022, Russia launched a full-scale invasion of Ukraine. The invasion was met with international condemnation and led to the imposition of severe sanctions on Russia."

Mashable reached out to Google for comment and received confirmation from the company's press team that it has "implemented temporary guardrails to disable Bard’s responses to associated queries." The statement reads:

"Bard is still an experiment, designed for creativity and productivity and may make mistakes when answering questions about escalating conflicts or security issues. Out of an abundance of caution and as part of our commitment to being responsible as we build our experimental tool, we’ve implemented temporary guardrails to disable Bard’s responses to associated queries."

In a blog post from March 2023, Google VPs shared thoughts on the Bard "experiment" and cautioned users that while large language models (LLMs) are exciting, "they’re not without their faults." The statement reiterated that because such models learn from "a wide range of information" they are susceptible to reflecting "real-world biases and stereotypes."

People have also brought into question the answers provided by OpenAI's ChatGPT to certain prompts. Mashable tried asking questions to GPT-3.5, the Default version. In response to questions about the history between Israel and Palestine, the chatbot appears to provide detailed information, but underscores that its knowledge may be outdated: "Please note that the situation can change rapidly, and it is essential to consult up-to-date news sources for the latest information on the Israel-Hamas conflict, as developments may have occurred since my last knowledge update in January 2022," read one response to the question "what is happening between israel and hamas?".

To the question "can you tell me what's happening in palestine?", the chatbot again stated its information may be outdated and provided a mostly vague answer, also directing users to read "reputable news sources" instead (we can get behind that advice):

I apologize, but I am not able to provide real-time information as my knowledge was last updated in 2022. As of my last update, the situation in Palestine has long been a complex and sensitive issue, characterized by ongoing conflicts and disputes between the Palestinian territories and Israel. The conflict has deep historical and political roots, and it has resulted in significant humanitarian issues and human rights concerns, including displacement, violence, and loss of life on both sides. Efforts to find a lasting resolution have involved numerous peace negotiations, international interventions, and agreements, but a comprehensive and long-term solution has remained elusive. The issues involved encompass various elements, including the status of Jerusalem, Israeli settlements, Palestinian statehood, security concerns, and the rights of refugees, among others. For the most recent and accurate updates on the situation in Palestine, I recommend checking reputable news sources, such as international news agencies and organizations that provide coverage on the region.

People across the internet have experimented with the responses provided by AI-powered chatbots about the war. For instance, writer and illustrator Mona Chalabi posted the significant difference in the answers ChatGPT gave to the questions "Do Israelis deserve justice?" and "Do Palestinians deserve justice?". The answer to the former was far more concrete, while the answer to the latter stated that "the question of justice for Palestinians is a complex and highly debated issue". Others have asked the same questions and received a similar disparity.

Mashable has reached out to OpenAI for comment and will update this story if we hear back.

OpenAI has previously insisted that its model is impartial, but recent research has shown that AI models do, in fact, hold bias. On r/ChatGPT, a Reddit community dedicated to discussion about AI, the intersection of ChatGPT and Israel-Palestine was discussed in a since-deleted post. The comments remain, however, and one user responded: "People are biased, therefore ChatGPT will be biased."

Since the beginning of the Israel-Hamas war in October, online platforms have been widely questioned by users for their actions. Companies owned by Meta, including Instagram and Facebook, have been accused of shadowbanning content and other incidents of glaring bias. X and TikTok have come under similar scrutiny for moderation policies or the spread of disinformation.

Boeing's new VR simulator immerses astronauts in space training

Boeing's new VR simulator immerses astronauts in space training

You Are on Display: An Interview with Morgan Parker

You Are on Display: An Interview with Morgan Parker

“Infinite Mischief”: Elizabeth Bishop’s Rebuke to Robert Lowell

“Infinite Mischief”: Elizabeth Bishop’s Rebuke to Robert Lowell

Headwinds and Tailwinds: Why We Underestimate the Role of Luck

Headwinds and Tailwinds: Why We Underestimate the Role of Luck

Portraits and Perennials: Art by Robert Kushner

Portraits and Perennials: Art by Robert Kushner

Real Polaroids, Fake People: Duane Hanson’s Photos of His Lifelike Sculptures

Real Polaroids, Fake People: Duane Hanson’s Photos of His Lifelike Sculptures

Charlotte Brontë’s Favorite Critic

Charlotte Brontë’s Favorite Critic

The Sound and the “Furious”

The Sound and the “Furious”

Nan Goldin: Photography Is “a Chance to Touch Someone with a Camera”

Nan Goldin: Photography Is “a Chance to Touch Someone with a Camera”

Apple is actively looking at AI search for Safari

Apple is actively looking at AI search for Safari

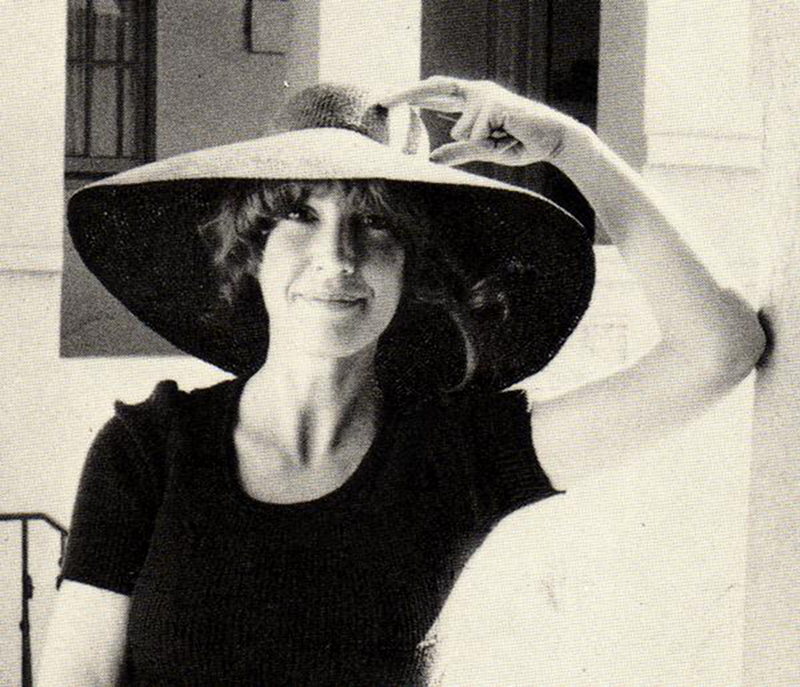

Paula Fox, 1923–2017

Paula Fox, 1923–2017

Connecting Walt Whitman and Philip Levine

Connecting Walt Whitman and Philip Levine

Finding Escape in Eve Babitz’s Hollywood

Finding Escape in Eve Babitz’s Hollywood

Arizona’s Beautiful Commitment to Darkness

Arizona’s Beautiful Commitment to Darkness

Harry Mathews, 1930–2017

Harry Mathews, 1930–2017

The Making of a Comics Biography, Part 3

The Making of a Comics Biography, Part 3

Apple is actively looking at AI search for Safari

Apple is actively looking at AI search for Safari

Reading Playboy in Vietnam (For the Articles, of Course)

Reading Playboy in Vietnam (For the Articles, of Course)

How Google is trying to fix Android's emoji problemNASA is making moves to bring supersonic air travel to US skiesJapanese beer giant is recognising employees' sameLego Boost is an awesome robotNetflix picks up new animated series from Matt GroeningNASA is making moves to bring supersonic air travel to US skiesBudding little makeup artist melts hearts during her first tutorialDo Roku and Apple TV really speak film geek? We put voice search to the test for answersPoorly designed tote bag shows exactly why fonts are EXTREMELY importantAre we about to reach peak emoji?Arcade Fire says you can wear whatever you want to their showIndia won't allow driverless cars, says transport chiefMLB player's 2Leslie Jones live'Game of Thrones' Season 7 scrolls: Read Ser Jorah's letter to Daenerys TargaryenIndia won't allow driverless cars, says transport chiefMom talks about that heartbreaking photo of her trans son to fight discriminatory bathroom billT.J. Miller is saying crazy things once again'Game of Thrones': Sam Tarly is going to cure greyscaleSlug lands on woman's face on night out and all hell breaks loose The Duration of 'Vexations' Writing a Memoir of Difficult Women Staff Picks: Fat Ladies, Flowers, and Faraway Lands by The Paris Review Toddlers and Turtles: The 8 best and funniest tweets of the week Instagram is here to stay. So people are changing how they use it. 
Happy Accidents Travel Snapshots from an Odyssey The Pound Poem That Wasn't By Pound Thelonious Monk and Me Bluesky is now 2 million users strong, with big plans ahead ChatGPT essays and more: How teachers and schools are dealing with AI writing To the Attic: Virginia Woolf and Abelardo Morell Suitcase Full of Candy: An Interview with Svetlana Alexievich Staff Picks: Witch Meta's new AI tool will let you add a dog into every picture Nick Cave slams AI bot ChatGPT: 'A grotesque mockery of what it is to be human' Thorn Vine on the Wall 'Thanksgiving' review: Eli Roth's latest is a gore snore Pleasure Principles: An Interview with Carmen Maria Machado Worst passwords of 2023 include some familiar favorites. See the list.

2.7409s , 10156.7578125 kb

Copyright © 2025 Powered by 【Just a Stranger】,Fresh Information Network